What happens when the scientific method observes itself?

When a study revealed problems with fMRI data, the media’s response was fast and furious.

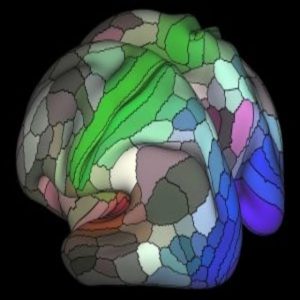

Study authors found that maps based on simulated resting-state data are subject to a high (70%) Familywise Error (FWE) rate. This map is based on data from resting-state fMRI scans performed as part of the Human Connectome Project.

Credit: Matthew Glasser and David Van Essen, Washington University

When a study published in the Proceedings of the National Academy of Sciences this past July found errors in three popular functional magnetic resonance imaging (fMRI) software packages, the media and the blogosphere rushed to the presses. The backlash was fast, ferocious, and unexpected.

“15 years of brain research has been invalidated by a software bug,” said the International Business Times.

In fact, fMRI has never been a neat and tidy technique, said Thomas Nichols, a professor at the University of Warwick in England and one of the study authors. That’s why Nichols and colleagues Anders Eklund and Hans Knutsson, researchers at Linköping University in Sweden, reviewed years of fMRI research to check whether the accepted false-positive rate for any given brain scan is reliable. What they found both surprised them and stoked the fires of controversy among their peers and science writers alike.

In the study, entitled “Cluster Failure: Why fMRI inferences for spatial extent have inflated false-positive rates,” the authors highlighted two numbers that seemed to carry great weight: up to 70%, the false-positive rate they found for the three main software packages used in fMRI research; and 40,000, the number of published papers that might be affected.

Eklund first thought the study would be rather boring, but once he saw the size of the error and the number of studies implicated, he thought, “This is big.”

fMRI measures blood flow, metabolism, and oxygenation to create a map of the brain. To do this, it uses voxels, cubes approximately 3 mm on each side, set in 3-D space in relation to ~1 million other voxels (for the human brain). Because each brain varies slightly in morphology, the fMRI software massages each scan to fit into a broader template, using large swaths of simulated data to set the template parameters, and it is here that potential errors lie. By making certain assumptions as it creates each brain map, the analysis may yield false-positive results when measuring task-based brain activity. The accepted ceiling for these false positives, expressed as the Familywise Error (FWE) rate (the probability of at least one false positive anywhere in a brain map), is 5%.
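To see why a map-wide 5% ceiling is demanding, consider the textbook case of m independent tests, each run at significance level α with every null hypothesis true: the chance of at least one false positive is 1 - (1 - α)^m. The sketch below is a simplified illustration that assumes independent voxels, which real brain maps are not (neighboring voxels are correlated), so it shows the arithmetic of familywise error rather than how any fMRI package actually controls it. The function name `fwe_independent` is hypothetical.

```python
def fwe_independent(n_tests: int, alpha: float) -> float:
    """Probability of at least one false positive among n_tests
    independent tests, each run at level alpha, when there is
    no real effect anywhere (every null hypothesis is true)."""
    return 1.0 - (1.0 - alpha) ** n_tests


# Uncorrected: 100 independent "voxels", each tested at 5%.
print(round(fwe_independent(100, 0.05), 3))        # 0.994

# Bonferroni correction: divide alpha by the number of tests.
print(round(fwe_independent(100, 0.05 / 100), 3))  # 0.049
```

Without correction, a false positive somewhere in the map is almost guaranteed; dividing α by the number of tests pulls the familywise rate back under 5%, at least under the independence assumption made here.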

The authors tried to verify the FWE rate by using actual resting-state fMRI data, obtained through data sharing with scientists around the globe, instead of the software-generated null data, which is supposed to simulate the resting state of a large population sample. What they found was shocking. Eklund, Nichols, and Knutsson discovered FWE rates as high as 70%, casting more than a shadow of a doubt over a wide range of papers published over the last few decades.
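The logic of this kind of check can be sketched in a few lines: run many analyses on data known to contain no real effect, and count how often an analysis reports at least one significant finding. For a valid method, that fraction should sit near 5%. The toy below is hypothetical and is not the study’s pipeline: each "analysis" tests 100 independent noise voxels against a Bonferroni threshold, and a mismatch between the true noise level (`true_sd`) and the level the test assumes (`assumed_sd`) stands in for a faulty modeling assumption. All function and parameter names are illustrative.

```python
import random
from statistics import NormalDist


def empirical_fwe(run_null_analysis, n_analyses=1000, seed=1):
    """Fraction of null analyses that report at least one 'significant' voxel."""
    rng = random.Random(seed)
    flagged = sum(run_null_analysis(rng) for _ in range(n_analyses))
    return flagged / n_analyses


def make_max_z_test(n_voxels, true_sd, assumed_sd, alpha=0.05):
    """Hypothetical toy test: flag the map if any voxel's z-score exceeds a
    one-sided Bonferroni threshold, computed using an *assumed* noise SD."""
    threshold = NormalDist().inv_cdf(1 - alpha / n_voxels)

    def run(rng):
        # No real effect anywhere: every voxel is pure noise.
        return any(rng.gauss(0.0, true_sd) / assumed_sd > threshold
                   for _ in range(n_voxels))

    return run


correct = make_max_z_test(100, true_sd=1.0, assumed_sd=1.0)
wrong = make_max_z_test(100, true_sd=1.5, assumed_sd=1.0)
print(empirical_fwe(correct))  # roughly 0.05
print(empirical_fwe(wrong))    # far above 0.05
```

Under these assumptions the correctly specified test lands near 5%, while the misspecified one flags most null analyses. The exact numbers depend entirely on the toy parameters and say nothing quantitative about real fMRI data.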

However, while the media and bloggers focused on the seemingly staggering numbers, the reality behind the errors the study exposed was far less dramatic than the headlines suggested.

“We enumerated a large number of studies by making certain assumptions,” said Nichols. The inflated error rates were traced to cluster analyses, in which the software measures activity across regions of contiguous voxels rather than at individual points in space. Nichols and his colleagues later submitted a correction to the paper that revised the estimated number of affected papers down to about 3,500, a significant number but nowhere near their original estimate of 40,000 suspect studies.
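In broad strokes, a cluster analysis first keeps voxels whose statistic exceeds a cluster-forming threshold, then groups adjacent survivors into clusters and reports only clusters above some minimum size. Here is a minimal sketch on a hypothetical 2-D map, assuming 4-connectivity (real analyses work in 3-D), with `cluster_threshold` and `min_size` as illustrative parameters. In real software the minimum size is derived from statistical assumptions about the data, and it is this step of cluster inference that the study called into question.

```python
from collections import deque


def find_clusters(stat_map, cluster_threshold, min_size):
    """Return clusters (lists of (row, col)) of supra-threshold voxels
    that are 4-connected and contain at least min_size voxels."""
    rows, cols = len(stat_map), len(stat_map[0])
    seen = [[False] * cols for _ in range(rows)]
    clusters = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or stat_map[r][c] <= cluster_threshold:
                continue
            # Breadth-first flood fill from this supra-threshold voxel.
            queue, cluster = deque([(r, c)]), []
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                cluster.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx]
                            and stat_map[ny][nx] > cluster_threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Keep only clusters large enough to pass the extent threshold.
            if len(cluster) >= min_size:
                clusters.append(cluster)
    return clusters


stat_map = [
    [0.1, 3.5, 3.2, 0.0],
    [0.2, 3.1, 0.4, 0.3],
    [0.0, 0.1, 0.2, 4.0],
]
print([len(c) for c in find_clusters(stat_map, 3.0, 2)])  # [3]
```

The isolated voxel at 4.0 is the strongest single value in the map, yet it is discarded because its cluster is too small, which is why the choice of minimum cluster size matters so much for the false-positive rate.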

Additionally, said Nichols, there is a great deal of good brain research being conducted using other imaging techniques such as positron emission tomography. And, he continued, there’s a large amount of research going on without any imaging technology at all.

In contrast to what turned out to be hyperbolic media coverage, the developers of the three fMRI software packages took the study’s publication in good humor. All are now working to remove the bugs identified in this study from their software.