Under the sea? How coral reef health monitoring may come ashore

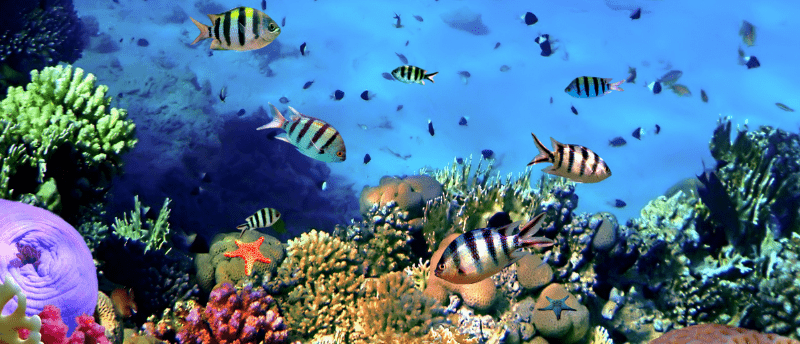

A new AI method that can accurately assess coral reef health from sound recordings may remove the need for intensive visual surveys by expert divers.

A team of researchers from the University of Exeter (UK) has trained artificial intelligence (AI) to recognize the health of coral reefs from sound recordings. A reef's soundscape can indicate whether it is healthy or degraded, depending on the presence (or absence) of noises made by fish and other creatures. These differences are usually identified by experts through laborious manual analysis, but the new AI method could make coral reef health tracking faster and more accurate.

Coral reefs currently face several threats, including climate change, which makes the success of conservation projects vital; monitoring reef health is an essential part of that effort. "One major difficulty is that visual and acoustic surveys of reefs usually rely on labor-intensive methods," explained Ben Williams, the lead author of the study.

Williams continued: “Visual surveys are also limited by the fact that many reef creatures conceal themselves, or are active at night, while the complexity of reef sounds has made it difficult to identify reef health using individual recordings.”


Using machine learning, the team trained a computer algorithm to distinguish between healthy and degraded coral reefs from recordings of each. The AI was then able to find patterns in the reef recordings that human listeners cannot detect, correctly identifying a reef's health 92% of the time.
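The study's actual model is not described here, so the following is only a toy illustration of the general idea: learn from recordings already labelled "healthy" or "degraded", then score the classifier on recordings it has not seen. It assumes each recording has been boiled down to two made-up acoustic features (a hypothetical share of minutes containing fish calls and a hypothetical normalized acoustic energy), and it swaps in a very simple nearest-centroid classifier rather than whatever the Exeter team used. All numbers are invented.

```python
from statistics import mean

# Hypothetical, invented data: (fish_call_share, acoustic_energy) per recording.
TRAIN = [
    ((0.80, 0.75), "healthy"),
    ((0.72, 0.70), "healthy"),
    ((0.88, 0.83), "healthy"),
    ((0.25, 0.30), "degraded"),
    ((0.31, 0.22), "degraded"),
    ((0.19, 0.26), "degraded"),
]

# Held-out recordings; the last one is a degraded reef that "sounds" lively.
TEST = [
    ((0.78, 0.79), "healthy"),
    ((0.28, 0.24), "degraded"),
    ((0.65, 0.60), "healthy"),
    ((0.58, 0.55), "degraded"),
]


def centroids(samples):
    """Average feature vector for each label (the 'typical' recording)."""
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(dim) for dim in zip(*vectors))
        for label, vectors in by_label.items()
    }


def classify(features, cents):
    """Pick the label whose centroid is nearest (squared Euclidean distance)."""
    return min(
        cents,
        key=lambda label: sum((f - c) ** 2 for f, c in zip(features, cents[label])),
    )


def accuracy(samples, cents):
    """Fraction of recordings whose predicted label matches the true label."""
    correct = sum(classify(features, cents) == label for features, label in samples)
    return correct / len(samples)


if __name__ == "__main__":
    cents = centroids(TRAIN)
    print(f"Held-out accuracy: {accuracy(TEST, cents):.0%}")
```

On this invented data, three of the four held-out recordings are classified correctly, so the script prints "Held-out accuracy: 75%". The 92% figure reported for the study is the same kind of measure (share of recordings classified correctly) but on the researchers' real recordings and model.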

The coral reef soundscapes used in the study were supplied by the Mars Coral Reef Restoration Project, which is restoring heavily damaged reefs in Indonesia. The team hopes the new method will improve reef health monitoring for restoration efforts like this one.

It is often cheaper and easier to leave an underwater hydrophone (a microphone that records ocean sounds in all directions) to monitor a reef than to have expert divers survey it through regular visits, especially if the reef is in a remote location. Tim Lamont, a co-author based at Lancaster University (UK), concluded: "This is a really exciting development. Sound recorders and AI could be used around the world to monitor the health of reefs, and discover whether attempts to protect and restore them are working."