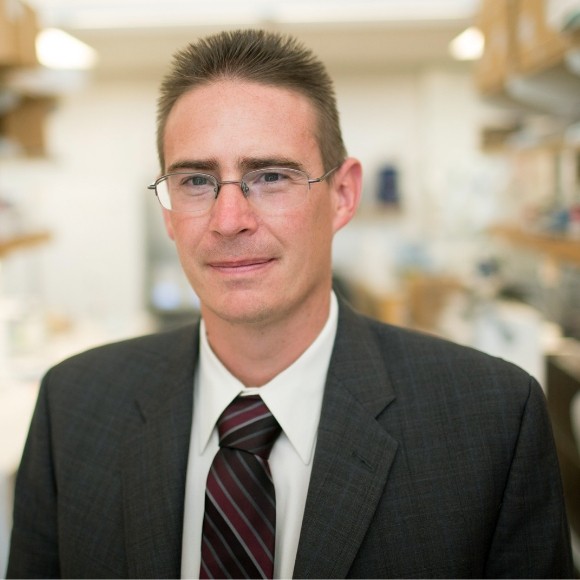

Michael Weinstein on the reproducibility of microbiome data

Michael Weinstein talks to BioTechniques about his work in bioinformatics and creating software to improve the accuracy and reproducibility of microbiome data.

Can you tell us a bit about yourself and what you’re doing at Zymo?

Initially my background was as a wet lab bench biologist. I spent years building mouse models and studying genetic disorders and metabolism. During my studies of genetic disorders, I started looking specifically at next-generation sequencing data, which got me into bioinformatics.

Currently, I spend a small portion of my time teaching bioinformatics workshops at UCLA (CA, USA). The rest of the time I work at Zymo (CA, USA) with the microbiome and bioinformatics team to improve the accuracy and reproducibility of microbiome data. Overall, my role at Zymo is developing bioinformatic applications that improve the utility of our innovations at the bench. As someone who worked at the bench for a decade and a half before transitioning primarily to informatics, this has been a very productive role for me. My current efforts are focused on improving microbiome pipelines from sample collection and preservation through extraction and on to analysis. This work requires a multidisciplinary approach and provides many exciting challenges daily.

Can you tell us about how researchers should go about analyzing their data in a reproducible, standardized manner?

A few of the major issues affecting microbiome analysis at the bench today are how methods and pipelines are being validated for accuracy, the need for quality controls at multiple steps in the pipeline, and the wide varieties of sample matrix that may be processed. On the bioinformatics side, we see difficulties setting up software on new systems in a reproducible manner, competing analysis tools, and users sometimes understanding how to work the software, but not how the software works.

At Zymo, one of our most important developments for the microbiome community and ourselves was the mock microbial standards of well-defined composition. At a recent meeting hosted by the National Institute of Standards and Technology (NIST; MD, USA), the need for microbial standard reference materials was brought up repeatedly as something the field requires to ensure the integrity and reproducibility of the data generated. Internally, we use the standard and the analysis tool extensively when validating a component of a microbiome preparation pipeline, often running a validated pipeline with only a single experimental change in order to determine whether that change increases or decreases the accuracy of the pipeline. Additionally, we always run some microbial standard quality control samples along with our experimental samples in order to ensure consistency and accuracy between different preparation runs. We offer it as a product for others needing to do the same. We recently released a new standard, designed specifically to represent predominant gut microbes. The use of this standard can make it much easier to ensure that microbiome methods, which are generally quantitative in nature, can be validated quantitatively instead of simply for presence/absence of microbes.

In order to facilitate the use of these standards, one of our recent efforts has been to develop automated analysis tools, known as the Measurement Integrity Quotient (MIQ) scores, that make it easy for users to determine how accurate their results are. At the same NIST meeting mentioned above, the need for methods to analyze standard reference materials that are reproducible, easy to run, and easy to understand was brought up many times. We believe we have met this challenge with these products.

Does the software require a large amount of training?

The software requires basic command line skills. If the user has Docker already set up on their computer, which is common on systems being used for bioinformatic analysis, the setup takes a few minutes. Docker enables everything to exist within this containerized operating system, so researchers don’t have to worry about installing other programs or managing dependencies. On a computer with Docker already installed and a fast internet connection, one can easily go from zero to starting an analysis in under 5 minutes.

Do you believe that the variety of software currently available is a problem?

I think the variety of software isn’t necessarily a problem; there is a wide variety of bioinformatic tasks that we need to accomplish, and that can require a variety of software. The problems come from software that is difficult to use due to missing documentation or dependencies and from having many programs to solve the same problem. Sometimes knowing the right software to use when there are multiple options for the same problem requires an in-depth understanding of both the kind of data expected and the inner workings of the software; a tool optimized for analyzing shotgun metagenomics on a highly complex sample may underperform on a low-complexity sample, and vice versa. Right now, there are well over a dozen different pipelines for analyzing shotgun metagenomic reads. Christopher Mason and several others published a paper in Genome Biology in late 2017 that used simulated reads to test several of these pipelines and gain a better understanding of their strengths and weaknesses. It can be difficult for the average user to keep track of what the optimal software is for their desired analysis and which software has fallen into disfavor or has been officially deprecated.

Targeted sequencing, such as 16S, currently has two competing pipelines with different software packages taking different approaches and generating different outputs. First, there’s the operational taxonomic unit (OTU) approach, which is the classic clustering approach. There’s also a newer technique, the amplicon sequence variant (ASV) approach. We know that sequencers make errors – the OTU approach lowers taxonomic resolution a bit to take these errors out of focus. With this approach, you bin the microbes together based on a sequence similarity threshold and can ignore errors that fall below that threshold. The ASV approach goes in the opposite direction – it tries to increase the resolution by building an error model for the run and then asking, for every observed amplicon sequence, how likely it is to be the result of a sequencing error under that model and how likely it is to be a real sequence from the sample. It is difficult to say that there is a correct and incorrect choice between these two approaches, as it depends on the goals of the user. The typical user complaint about the ASV approach is that it loses too much sensitivity to rarer sequences in exchange for higher specificity, while the main user complaint about the OTU approach is the opposite: it tends to keep more rare sequences, but some of them may be spurious. For a more experienced user, the QIIME2 pipeline offers the ability to choose between these approaches.
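To make the OTU idea concrete, here is a minimal Python sketch of threshold-based binning. It is only an illustration and not how any particular package works: the 97% threshold is a commonly used convention assumed here (the interview does not give a number), the function names are hypothetical, and identity is computed by simple position-by-position comparison of equal-length reads, whereas real tools align the sequences first.

```python
def identity(a: str, b: str) -> float:
    """Fraction of matching positions between two equal-length sequences."""
    if len(a) != len(b):
        raise ValueError("this toy example only compares equal-length sequences")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def greedy_otu_cluster(reads, threshold=0.97):
    """Bin reads into OTUs: each read joins the first OTU whose seed it
    matches at or above the threshold, otherwise it seeds a new OTU."""
    otus = []  # list of (seed_sequence, member_reads) pairs
    for read in reads:
        for seed, members in otus:
            if identity(read, seed) >= threshold:
                members.append(read)
                break
        else:
            # No existing seed was similar enough, so start a new OTU.
            otus.append((read, [read]))
    return otus


# Hypothetical 100-base reads: a seed, a copy with one substitution
# (as a sequencing error might produce), and a clearly different read.
seed_read = "ACGT" * 25
one_error = "T" + seed_read[1:]
divergent = "T" * 10 + seed_read[10:]

otus = greedy_otu_cluster([seed_read, one_error, divergent])
for seed, members in otus:
    print(len(members), "read(s) in OTU seeded by", seed[:12] + "...")
```

The read with a single substitution is absorbed into the seed's bin, which is the sense in which the threshold takes errors "out of focus"; the cost is that a genuine variant differing by one base would be absorbed in exactly the same way.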

What else are you doing in this space?

Most recently, we have developed and released a bioinformatics package for analyzing the accuracy of a metagenome analysis on a known-input standard sample by comparing the observed composition of microbes in the sample to the expected composition.

Because we can quickly and reproducibly scale this system and analyze accuracy relative to any standard with known relative microbial abundances, we have been able to test many different microbiome methods for their relative accuracy quickly and easily. One example of this application is running several dozen identical microbiome preparations with our microbial standard where only the bead mixture varied between replicates. Using this scoring system, we were able to determine which bead mixtures give the least biased lysis and the most accurate results. This was part of a larger study of hundreds of different lysis conditions and protocols, comparing accuracy and bias for each of them. We will be submitting this study for peer review in the very near future and will release preprints at the same time.
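The comparison of observed and expected composition described above can be sketched in a few lines of Python. This is a hedged illustration only: it is not the MIQ scoring formula, which the interview does not describe. The root-mean-square error used as a summary, the function names, and the taxon labels and counts are all assumptions made for the example.

```python
import math


def normalize(values):
    """Convert counts (or fractions) per taxon into relative abundances."""
    total = sum(values.values())
    if total <= 0:
        raise ValueError("total abundance must be positive")
    return {taxon: value / total for taxon, value in values.items()}


def composition_error(observed_counts, expected_abundance):
    """Return per-taxon deviation (observed minus expected relative
    abundance) and the root-mean-square error across all taxa."""
    observed = normalize(observed_counts)
    expected = normalize(expected_abundance)
    taxa = sorted(set(observed) | set(expected))
    deviations = {
        taxon: observed.get(taxon, 0.0) - expected.get(taxon, 0.0)
        for taxon in taxa
    }
    rmse = math.sqrt(sum(d * d for d in deviations.values()) / len(taxa))
    return deviations, rmse


# Hypothetical two-member standard with equal expected abundance,
# and hypothetical read counts from one preparation.
expected = {"taxon_A": 0.5, "taxon_B": 0.5}
observed = {"taxon_A": 600, "taxon_B": 400}

deviations, rmse = composition_error(observed, expected)
for taxon, deviation in deviations.items():
    print(f"{taxon}: {deviation:+.3f}")
print(f"RMSE: {rmse:.3f}")
```

Running the same function on preparations that differ in a single step, such as the bead mixture, would give one comparable number per condition, which is the kind of side-by-side comparison described here.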

At Zymo, we have also committed ourselves to helping the field beyond simply providing materials to simplify nucleic acid preservation, extraction, and processing. We have been releasing educational videos and blogs to help our users understand scientific concepts related to applications such as high-throughput sequencing and RNA library preparation. We also have developed an internal culture of open-sourcing solutions when possible. Two recent examples of this are the MIQ scoring systems and FIGARO, a tool for finding optimized, reproducible trimming parameters to utilize in the DADA2 software pipeline.

What other challenges remain in this area?

One of the big challenges is that we cannot completely know what is in a naturally occurring sample. We cannot know exactly what state the different microbes are in, and we cannot know the exact composition of the sample. Additionally, microbial states such as biofilm are difficult to model in a known-input standard because counting cells within a biofilm is a substantial challenge.

I would say that probably the most impressive challenge I’ve seen – although this sounds a little boring when I describe it – is understanding the different effects of the variety of microbiome preservation, extraction, and analysis techniques, both individually and when combined in different ways. Using our new tools to test the biases created by techniques has given us an interesting view of how different studies can be compared to one another. I remember hearing in a news report, even before I started studying the microbiome, about the Human Microbiome Project (HMP) in the USA and MetaHIT in Europe: two studies looking at gut microbiomes in two different populations. These two studies reported major differences between the Europeans and Americans; everybody was wondering if it could be due to diet, lifestyle, genetics and so on. Later on, it was demonstrated that the observed differences could be explained by the differences in techniques used between the two groups. The methods employed in both studies were among those we tested, and upon observing the biases between them, the results made perfect sense.

There are several groups working to address these issues. Zymo Research is one of them, but there is a great effort being put forth here from multiple groups across different continents. In the US, NIST, the Microbiome Quality Control project (MBQC), and the Metagenomics and Microbiome Research Group from the Association of Biomolecular Resource Facilities are helping lead the way. The International Human Microbiome Standards Project (IHMS) is working to foster collaboration on this around the world, and there are academic laboratories around the world working on this. I also recently attended a meeting of the Pharmabiotic Research Institute (PRI), who are working to help stakeholders work through the development and implementation of regulation on microbial therapeutics in the EU.

Do you have any ideas about how that might be tackled?

I think there is a lot of work to do. My PhD advisor is a professor from UCLA by the name of Stephen Young. He would frequently remind us to be careful of “wishing” for a desired result and to be our own harshest critics. Nobody has ever wished themselves into more accurate or reproducible results. Improving the accuracy and the reproducibility between studies in the microbiome field will require hard work from many people. I believe that we are just in the early stages of developing a culture of “radical reproducibility” for the field. We have developed benchmarking tools and standards that are easy to use and interpret, so that laboratories and core facilities can check the quality of their own pipelines both at creation and on an ongoing basis.

What would you say has been the most exciting discovery made thus far in microbiomics?

I worked on a cancer genomics and immunotherapy project for a few years. I have been a big believer in the potential for immunotherapy to target some of the more “evasive” cancers that seem to mutate rapidly and evolve around whatever traditional therapies we throw at them. The results coming from Jennifer Wargo’s laboratory (University of Texas MD Anderson Cancer Center, USA) and other groups on the role of the tumor and gut microbiomes in cancer treatment are fascinating to me and I am always eagerly awaiting the next publication on this topic.

Likewise, I worked for years on metabolic syndrome and cardiovascular disease at a time when the gut microbiome was only starting to be a topic of discussion. Given the importance of diet in these disorders, and the fact that the gut microbiome can often alter how nutrients are presented to the host, I eagerly await the opportunity to see what role the gut microbiome plays in metabolic syndrome, heart disease, and especially fatty liver disease, which has been threatening to become a serious public health concern. In this area, Eran Segal from the Weizmann Institute (Rehovot, Israel) has been making some major contributions.

I am also constantly amazed by the work being done in Rob Knight’s group at the University of California, San Diego (UCSD; USA). Additionally, their openness with data and analytic tools has been a driver of innovation throughout the field. They are truly going beyond simply making discoveries themselves and are facilitating discoveries throughout the field. At a recent meeting, Knight presented data tracking how the intestinal microbiota in an individual with inflammatory bowel disease changed in relation to their disease state; he was able to show how the differences between a healthy and disease state in this individual were larger than what one would expect to see between any two healthy individuals tested in this large, population-based study. They were able to show this because Knight and his group have not only mastered the data but are also constantly innovating in methods to present these complex datasets.

What are you excited to see over the next 10 years?

In the short term, I am looking forward to improved accuracy and reproducibility in microbiome studies. I am also looking forward to a shift in the bioinformatics field towards more self-contained applications using systems like Docker. The two biggest problems I see now in bioinformatics are software with poor documentation and software with difficult dependencies. This new approach would solve the latter problem almost overnight.

Also in the short term, the use of microbiome analysis is going to play a large role in the diagnosis of infectious disease. Previously, there were only two scenarios where culturable microbes could be easily identified as pathogenic culprits. The first of these was the detection of microbes that do not belong in or on the host at all, such as Y. pestis, the detection of which in a host immediately suggests plague. The second scenario was microbes out of place, such as intestinal bacteria in the urinary tract, a common cause of urinary tract infection. A newer scenario that becomes much more tractable with the development of quantitative microbial analysis is microbes out of balance, such as an overgrowth of Candida. This scenario is much harder to identify, as the causative microbes may be expected in the affected location, but not in the quantities observed. Presently, these techniques are already being employed in veterinary practice to great effect in diagnosing infection and guiding treatment, and they should be deployed to human clinics soon.

In the longer term, disease surveillance will be another big area, I believe. This will go beyond the traditional bacteria and require true metagenomics getting into fungi and viruses, but the power of this for monitoring emerging infectious disease is tremendous. As we are currently experiencing with the coronavirus outbreak, emerging infectious disease is an important area of research with tremendous consequences, and forewarned is forearmed.

There are also several groups that have extremely promising studies in progress, some of whom have created commercial spin-offs to build upon their success. As I mentioned before, Segal has carried out several studies on the interaction between the host, the microbiome, food, and health. I previously mentioned Knight’s group at UCSD, and they have several interesting ongoing projects looking at everything from human microbiomes to the microbiome of buildings and environmental microbiomes. Mason, whom I mentioned earlier for the review of shotgun metagenomic tools, has a project looking at subways around the world to track the spread of pathogenic microbes as well as understand how the microbial environment of these synthetic ecosystems is affected by the humans for whom they were designed. Some of this technology is being applied by a start-up he co-founded called Biotia (NY, USA) with the goal of decreasing nosocomial infections. Additionally, Zymo Research technologies have been licensed to a start-up called MiDog (CA, USA) to apply NGS to diagnosing infections in animals (I am not a part of MiDog, but I have used their test on my own dog).