Advances in AI and neuroimaging combine to slay stroke

How have developments in AI and neuroimaging combined to improve the diagnosis and treatment of stroke?

Stroke is one of the leading causes of adult disability and death worldwide and, until recently, was completely untreatable. When Greg Albers, Director of the Stroke Center at Stanford University (CA, USA), began working at Stanford in 1984 as a young neurologist, it was these factors that drew him into the study of stroke, invigorated by the prospect of a scientific issue of Goliath proportions with seemingly few gaps in its armor.

The initial treatments for stroke were based around the assumption, inveterate in the neurological community at the time, that strokes evolve quickly and that after a few hours the damage done is irreversible. In the early 1990s, new imaging studies developed at Stanford revealed to Greg that strokes can evolve over a matter of days, not hours, and that there was a great degree of variation between different strokes. He realized that things needed to change.

Here, Greg reveals how advances in imaging and AI over the last decade have led to an overhaul in the diagnosis and treatment of stroke, taking the power away from the stopwatch and instead giving physicians the ammunition with which to load their slingshot and take down the Goliath of stroke.

Please introduce yourself and tell us about your institution.

My name is Greg Albers. I’m the Director of the Stroke Center at Stanford University (CA, USA), which I founded with Gary Steinberg in 1992. In addition to my work at Stanford, I’m the cofounder of iSchemaView, which is a company that uses software that was initially developed by our group at Stanford, named RAPID (Rapid Processing of Perfusion and Diffusion), for brain image analysis.

In your opinion, what are some of the biggest advances in the field of diagnostic neuroimaging in the last decade?

For me, the biggest advance is to be able to identify salvageable tissue in the brain, specifically for stroke patients. The way stroke used to be treated was based on a stopwatch approach – if you were within 4.5 hours you would get treated and if you weren’t, the assumption was there was no brain tissue to save. Developments in imaging have enabled us to see that every stroke is different, allowing us to identify if a patient has any salvageable tissue when they arrive at the hospital. If they do, then we can focus on restoring blood flow to the brain to treat them. Based on this imaging we have expanded the treatment window for stroke from 3-6 hours after the onset of symptoms, all the way up to 24 hours.

What technical developments have allowed you to begin to identify that salvageable tissue?

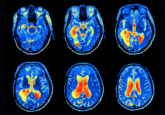

The first was what’s called perfusion imaging, which can be done either on a CT scan or an MRI scan. Perfusion imaging allows us to visualize the blood flow to the brain. With these blood flow measurements, we can identify tissue that is likely going to die while also identifying tissue that’s already been irreversibly injured. Essentially, you’re looking at tissue at risk as well as how much brain tissue is dead. This information is needed immediately to make treatment decisions, so we needed rapid processing to interpret it.

That was the second development, the RAPID software. This software takes the image results from the perfusion imaging scans and, within a couple of minutes, produces a map that indicates what is dead in pink and what is salvageable in green. Using that information, we did a couple of large clinical trials to demonstrate that a favorable profile – in other words, a small dead area and a large salvageable area – could be successfully treated up to 24 hours. This led to changes in international guidelines in 2018. That opened up stroke treatment to a much larger group of patients than could be treated before, and it has had a huge impact on reducing death and disability from stroke.
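The idea of a "favorable profile" can be sketched in a few lines of Python. This is only an illustration and not the RAPID software's actual logic: the class name, the function name and both threshold parameters are hypothetical, and the interview does not state what volumes count as "small" or "large".

```python
from dataclasses import dataclass


@dataclass
class PerfusionSummary:
    """Hypothetical summary of a perfusion map (names are illustrative)."""
    dead_ml: float         # tissue already irreversibly injured (pink on the map)
    salvageable_ml: float  # tissue at risk but still salvageable (green on the map)


def is_favorable(summary: PerfusionSummary,
                 max_dead_ml: float,
                 min_salvageable_ml: float) -> bool:
    """Return True for a small dead area combined with a large salvageable area.

    Both thresholds are supplied by the caller; they are placeholders,
    not clinical criteria.
    """
    return (summary.dead_ml <= max_dead_ml
            and summary.salvageable_ml >= min_salvageable_ml)


# Example with arbitrary placeholder numbers (not clinical values).
patient = PerfusionSummary(dead_ml=10.0, salvageable_ml=80.0)
print(is_favorable(patient, max_dead_ml=50.0, min_salvageable_ml=20.0))
```

The thresholds are passed in rather than hard-coded so that the sketch does not imply any particular cut-off.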

Has this technology and these advances been able to affect any other chronic brain conditions?

There are also potential uses in the treatment of aneurysms, which are weak spots on a blood vessel that can rupture and cause a brain hemorrhage that can be fatal. They cause a huge amount of disability. The RAPID software is intended to identify those automatically, as opposed to depending on radiologists to read and identify them after a scan, as is the current protocol. Sometimes radiologists are less experienced at detecting aneurysms. The software can automatically detect aneurysms and track their size to see if they are growing, which indicates that an aneurysm is likely to hemorrhage.
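Tracking size across scans to spot growth can be illustrated with a minimal Python sketch. It assumes the sizes have already been measured, in millimeters, on successive scans; the function names and the tolerance parameter are hypothetical and do not describe how the RAPID software works.

```python
def size_changes(sizes_mm):
    """Change in size between each pair of consecutive scans."""
    return [later - earlier for earlier, later in zip(sizes_mm, sizes_mm[1:])]


def is_growing(sizes_mm, tolerance_mm):
    """Return True if the latest size exceeds the first by more than tolerance_mm.

    tolerance_mm is a caller-chosen allowance for measurement noise;
    it is an assumption of this sketch, not a clinical rule.
    """
    if len(sizes_mm) < 2:
        return False  # a single scan cannot show growth
    return sizes_mm[-1] - sizes_mm[0] > tolerance_mm


# Made-up measurements from three successive scans, oldest first.
history = [4.0, 4.5, 5.5]
print(size_changes(history))
print(is_growing(history, tolerance_mm=0.5))
```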

As more information from diagnostic scans becomes available, how can that information be processed to improve outcomes for patients?

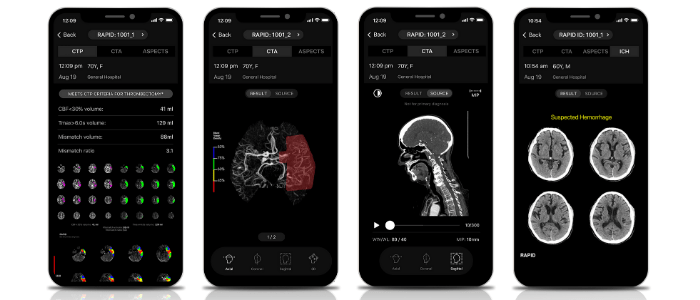

A lot of machine learning/artificial intelligence is being added to the process. We started with CT perfusion, but now we’ve moved on to automatically identifying large vessel occlusions, aneurysms and even the early signs of stroke with something called an ASPECTS score. This is all based on machine learning and the more data and information that these systems get, the more accurate they become.

When a patient who has stroke symptoms comes into the emergency room, we can run a variety of these AI-based scans. These scans can answer a lot of our questions: Does the patient have salvageable tissue? Do they have a brain hemorrhage? Do they have a large blood vessel occlusion? Do they have an aneurysm? We can get a comprehensive picture from computer-based algorithms to help make a diagnosis for the patient.

If you could ask for anything to improve the field of diagnostic neuroimaging, what would it be?

It is a field that is moving very quickly. We are looking for faster computation times right now. Most of these programs take about 2-3 minutes to give you an answer, which is fast, but we’re constantly trying to speed it up. Can we get it in 30 seconds rather than 2 minutes? These could be vital seconds for the patient.

The other thing that is helpful for designing this AI-based software is to have very large databases. To train AI to identify a brain hemorrhage, you feed a network 500 or more scans in which you have outlined the hemorrhage and another 500 that don’t have a hemorrhage. Then the software learns to recognize what a hemorrhage looks like. The programs become more and more accurate as more data comes in.
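As a rough illustration of the data side of this, the Python sketch below assembles a labeled set from 500 scans with a hemorrhage and 500 without. The scan identifiers are made up, the held-out validation split is an assumption added for illustration, and the sketch covers only yes/no labels, not the hemorrhage outlines or the network itself.

```python
import random


def build_labeled_set(positive_ids, negative_ids):
    """Pair each scan ID with a label: 1 = hemorrhage, 0 = no hemorrhage."""
    return ([(scan_id, 1) for scan_id in positive_ids]
            + [(scan_id, 0) for scan_id in negative_ids])


def train_validation_split(examples, validation_fraction, seed):
    """Shuffle reproducibly, then hold out a fraction for validation."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_validation = int(len(shuffled) * validation_fraction)
    return shuffled[n_validation:], shuffled[:n_validation]


# Hypothetical scan identifiers standing in for real image files.
positives = [f"hemorrhage_{i:03d}" for i in range(500)]
negatives = [f"normal_{i:03d}" for i in range(500)]

examples = build_labeled_set(positives, negatives)
train, validation = train_validation_split(examples,
                                           validation_fraction=0.2, seed=0)
print(len(train), len(validation))
```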

Where do you see the field in 5 years’ time?

Five years is a long way ahead for a field that’s making big advances every few months, but I would like to see it expand into a large number of disease processes. The biggest advances have been in the stroke field, but I can imagine patients coming in and having a scan analyzed by AI looking for a wide variety of diseases, tipping off the physician to different pathologies that may be present. The ones that are done right now (brain hemorrhage, aneurysm, ischemic stroke and large-vessel occlusion) are a small number of the total diseases that affect humans. Having more programs that can detect more diseases is where I believe the field will be in 5 years’ time.

It’s important to highlight that these programs are to assist the physician, not replace them. Medicine is very complicated. There are all sorts of variants and different disease processes, so I don’t think we will ever get to the point where the software takes over the role of a physician. It is the human intelligence of a doctor, who knows the patient’s goals and values, combined with the artificial intelligence that results in the best possible clinical decisions for the patient.

As AI for neuroimaging advances so rapidly, what ethical issues do you see beginning to present themselves?

Well, one that I alluded to is that some people think of AI software as 100% accurate and that it provides a definitive diagnosis. There is no AI tool that has 100% sensitivity and specificity. In other words, there will be some cases that will be missed by the AI software, and there will be cases that are false positives. The AI software may indicate a suspected hemorrhage but, on inspection, a physician may see that the software has been fooled by something that looked like a hemorrhage but isn’t. This could result in medicolegal issues: if you have been misdiagnosed by software, who is liable for that?
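For readers less familiar with the terms, sensitivity is the fraction of true cases the software catches, and specificity is the fraction of healthy cases it correctly clears. A minimal Python sketch of the standard definitions follows; the counts in the example are invented purely for illustration and do not describe any real tool.

```python
def sensitivity(true_positives, false_negatives):
    """Fraction of actual cases that were detected (missed cases lower it)."""
    total = true_positives + false_negatives
    if total == 0:
        raise ValueError("no positive cases to evaluate")
    return true_positives / total


def specificity(true_negatives, false_positives):
    """Fraction of non-cases correctly cleared (false alarms lower it)."""
    total = true_negatives + false_positives
    if total == 0:
        raise ValueError("no negative cases to evaluate")
    return true_negatives / total


# Invented counts: 50 scans with a hemorrhage, 100 scans without.
print(sensitivity(true_positives=45, false_negatives=5))    # 0.9
print(specificity(true_negatives=90, false_positives=10))   # 0.9
```

In this made-up example, 5 hemorrhages are missed and 10 scans raise false alarms, which is exactly the kind of error a physician needs to be ready to catch.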

The key point here is not to depend on the computer 100%, kind of like the self-driving car. You want a human there to be able to override it if there is a problem. You don’t want to fall asleep at the wheel and have the car drive into a wall.

Do you consider that risk, of physicians ‘falling asleep at the wheel’ as technologies and AI continue to get better, to be a growing likelihood?

I think there is that risk. Every piece of software has specifications: certain things that it can and can’t do. If the physician is not trained to understand what the limitations of the software are, they could potentially make mistakes. We put a lot of effort into training physicians to understand what the specifications and limitations of the software are.

When you look at the intersection of AI and neuroimaging, what aspect of it excites you the most?

The exciting part is when the AI leads to improvement in patient outcomes. You can do virtually anything with AI but the practical part is where it leads to making patients better. That’s what excites me the most, just trying to find applications that are actually going to improve patient outcomes, make a doctor’s life easier and make the patient’s outcome better.

Another fantastic development is that with this software, you can now get all of this information straight to your phone in an app. That means if I’m out of the hospital and someone comes into the emergency department with a stroke, I can view all of that imaging information and make decisions immediately, wherever I am. Not only does this increase the speed of treatment for patients but it improves doctors’ quality of life because we don’t have to rush into the hospital for false alarms.